Abstract

Knowledge graphs are the primary method of storing data in a rapidly increasing number of domains. The quality and structure of knowledge graphs determine the ability to produce insights, and the efficiency of natural language processing algorithms plays a key role. Predefined knowledge models have been a useful tool for organizing data; however, they are limited in their ability to capture emerging complexity. This paper proposes a purely machine-generated knowledge model as a potential way to create frictionless interoperability for all data users.

Introduction

Data is becoming an increasingly valuable commodity that drives innovation, research, and development across all industries. Knowledge graphs are the primary method of storing this data in a rapidly increasing number of domains. The quality and structure of knowledge graphs determine the ability to extract insights from data, and the accuracy and efficiency of natural language processing algorithms play an important role in this context. While predefined knowledge models have been a useful tool for organizing data, they are limited in their ability to capture the complexity and nuance of relationships between concepts. This essay explores an alternative approach to knowledge model engineering for creating knowledge graphs. It proposes a purely machine-generated knowledge model as a potential way to create frictionless interoperability for all data users. This approach allows for knowledge structures that are not bound by the constraints of predefined templates and can instead be tailored to the specific needs of a given use case.

Problem Definition

The problem can be distilled to this: knowledge models must be developed extremely quickly, because new scientific discoveries can make yesterday’s understanding obsolete within months, if not weeks. The sections below support this view.

Data Exchange Standards as Key Value Drivers

The emergence of the Internet age can be attributed to multiple factors. As Russell (2014) notes in his book “Open Standards and the Digital Age,” the development of communication standards stands out as a crucial catalyst. Much of the advancement can also be traced back to graph theory, which has enabled highly efficient search algorithms, social networks, and recommendation systems that harness the power of graph analysis. In the 1990s, the seminal work “Cybernomics” by Barlow (1998) laid the groundwork for the information economy by outlining its key implications. One that especially stands out is that “relationships replace things”: a relationship is represented by an “active flow of information, the greater the flow the more valuable is the relationship.” Another key observation is that “transaction becomes continuous.” In the decades since “Cybernomics” was published, we have seen the rise of social networks. The other prediction, about continuous transactions, manifests itself in a multitude of subscription models, particularly software as a service (SaaS) platforms, which attract a growing number of companies in the IT industry. Thus, the flow of data is crucial for the economy and the creation of value.

In economics, the creation of value is attributed to labor, capital, and total factor productivity. The latter measures the overall efficiency with which labor and capital are turned into output. Because we live in an information economy, where the value of services prevails over the value of products, total factor productivity plays a key role. At the same time, analysts, data scientists, consultants, and other technical experts are well aware that roughly 80% of their time is spent transforming data into a format that is amenable to analysis. Thus, there is a growing need to address the multitude of standards used for data exchange and the inefficiencies they create.

It is worth noting that around 30% of the data generated worldwide each day is healthcare data, and this volume is growing at a rate of 36% per year (Podcasts, 2022), making healthcare the best candidate for experimenting with new approaches to data processing. By streamlining data exchange standards, technology companies can unlock value in previously untapped areas of knowledge.

Future Shock and Language Singularity

Before the term “singularity” became a household word, a common term was “future shock,” coined by Alvin Toffler in his book of the same title (Toffler, 1972). Writing in 1970, he observed an ever-increasing acceleration of change and information overload. Today, future shock has finally caught up with our language. The number of product and service sub-categories per unit of language keeps increasing; therefore, as a word is used more frequently, its meaning collapses to its most frequent association, which does not necessarily reflect “yesterday’s original meaning.” As a result, unifying standards is a challenge in many industries. Recent developments in large language models (LLMs) (Touvron et al., 2023) make this problem especially difficult in knowledge management. It can be argued that LLMs will produce a lot of noise and exacerbate the biases present in their training data, distorting the language further.

The environment of current data formats can be described by Volatility, Uncertainty, Complexity, and Ambiguity (VUCA). VUCA is a concept that originated in 1987, based on the leadership theories of Warren Bennis and Burt Nanus (Bennis & Nanus, 2003). The concept was quickly adopted in military strategy and has since been applied in various contexts. A VUCA world is characterized by rapid and unpredictable change, in which traditional approaches to problem-solving and decision-making may no longer be effective. Organizations and individuals must be adaptable, resilient, and able to navigate complexity and ambiguity in order to succeed. The concept is also used to describe the state of the global economy and the challenges organizations face in the 21st century.

Problem Analysis

Two Key Problems with the Language Itself

First, the nature of all languages, formal as well as natural, differs from the nature of reality. According to Nalimov (1981), the key characteristic of any language whatsoever is that it is discrete. At the same time, Nalimov points out that reality is continuous, which creates unresolvable issues when a language is used to describe the world around us. For instance, the color spectrum varies continuously, yet a language can only cut it into a finite set of color words.

Second, if natural language is viewed as a formal logical structure, that is, as a closed set of rules, it must be either internally inconsistent or incomplete. The incompleteness theorems of the 20th-century logician Kurt Gödel showed that any consistent formal system expressive enough to describe elementary arithmetic contains statements that can be neither proved nor disproved within that system; such a system cannot be both consistent and complete. In other words, a sufficiently expressive set of logical rules is either contradictory or allows statements that are unprovable within those rules.

Ontologies as a Meta-Language

Ontology originated as a branch of metaphysics, the area of philosophy that deals with the nature of reality and existence. Since the 1970s, researchers have recognized the need to develop ontologies for knowledge engineering and for building powerful AI systems. An ontology can be defined as “a representational artifact, comprising a taxonomy as proper part, whose representations are intended to designate some combination of universals, defined classes, and certain relations between them” (Arp et al., 2015).

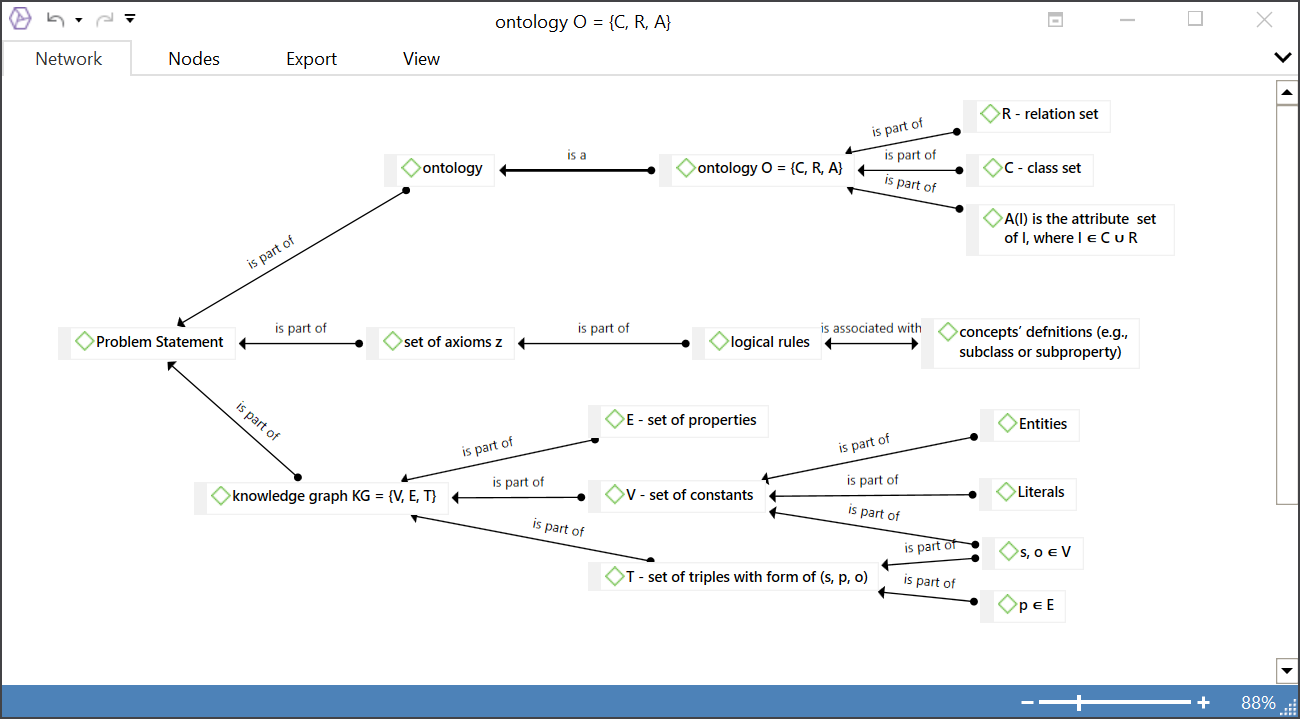

To avoid confusing mathematical jargon, Figure 1 presents a concept map, adapted from the work of Huang (2022), that visually explains what an ontology is and how it relates to a knowledge graph from the point of view of computer science.

Figure 1: Ontology and Knowledge Graph Model Concept Map
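To make the distinction in Figure 1 concrete, here is a minimal Python sketch. The ontology holds classes (a taxonomy of is-a links) plus the relation types allowed between classes, while the knowledge graph holds instance-level facts that must conform to it. All class, relation, and instance names are hypothetical illustrations, not taken from Huang (2022).

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Ontology:
    # Taxonomy: class -> parent class (None marks a root class).
    parents: dict[str, Optional[str]] = field(default_factory=dict)
    # Relation schema: relation name -> (domain class, range class).
    relations: dict[str, tuple[str, str]] = field(default_factory=dict)

    def ancestors(self, cls: str) -> list[str]:
        """Superclasses of `cls`, nearest first."""
        chain = []
        parent = self.parents.get(cls)
        while parent is not None:
            chain.append(parent)
            parent = self.parents.get(parent)
        return chain

    def is_a(self, cls: str, other: str) -> bool:
        return cls == other or other in self.ancestors(cls)


@dataclass
class KnowledgeGraph:
    ontology: Ontology
    types: dict[str, str] = field(default_factory=dict)  # instance -> class
    facts: list[tuple[str, str, str]] = field(default_factory=list)

    def add_fact(self, subj: str, rel: str, obj: str) -> None:
        """Store a (subject, relation, object) triple only if the ontology allows it."""
        domain, range_ = self.ontology.relations[rel]
        if not (self.ontology.is_a(self.types[subj], domain)
                and self.ontology.is_a(self.types[obj], range_)):
            raise ValueError(f"{subj} {rel} {obj} violates the ontology")
        self.facts.append((subj, rel, obj))


# Hypothetical example: the ontology says molecules may inhibit organisms.
onto = Ontology(
    parents={"Organism": None, "Bacterium": "Organism",
             "Molecule": None, "Antibiotic": "Molecule"},
    relations={"inhibits": ("Molecule", "Organism")},
)
kg = KnowledgeGraph(onto, types={"penicillin": "Antibiotic",
                                 "S. aureus": "Bacterium"})
kg.add_fact("penicillin", "inhibits", "S. aureus")  # accepted by the schema
```

Reversing the triple (`S. aureus` inhibits `penicillin`) raises `ValueError`, because a bacterium is not a molecule. In this sketch, the ontology constrains the graph and not the other way round.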

Common Methods of Ontology Engineering

Contemporary literature describes a broad range of approaches to ontology engineering. One of the many ways to classify them is to distinguish “top-down” from “bottom-up” approaches (Keet, 2018). While the top-down methodology emphasizes the reuse of existing domain ontologies and upper-level ontologies, the bottom-up methodology specifically aims to reveal the “possible semantics of the data” from which the ontology is constructed (Lopez-Pellicer et al., 2008). A lengthy discussion of this matter, however, lies beyond the scope of this work, whose goal is to build a case for the hypothesis proposed in the next section.

Proposed Solution

Bottom-Up Approach to Ontology Construction

According to the analysis of Keet (2018), modern bottom-up approaches to generating ontologies range from fully manual to almost fully automated. The almost fully automated approaches rely on natural language processing algorithms; however, because language is ambiguous, that method is used only as a last resort (Keet, 2018). The documented approaches rely on highly granular automation; for example, a part-of-speech tagger is run as a separate step.
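To illustrate what “highly granular” automation looks like, here is a minimal sketch of one rule-based bottom-up step: extracting candidate is-a pairs with the classic Hearst “X such as Y” lexico-syntactic pattern. This technique is swapped in purely for illustration and is not necessarily one of the methods Keet (2018) surveys. A real pipeline would run a part-of-speech tagger first to find noun phrases, rather than relying on the crude single-word regular expression assumed here.

```python
import re

# Crude stand-in for a noun phrase: a single lowercase word. In a granular
# pipeline, this is where part-of-speech tags would be consulted instead.
NOUN = r"[a-z]+"
# Hearst pattern: "<hypernym> such as <hyponym>(, <hyponym>)* (and <hyponym>)"
SUCH_AS = re.compile(
    rf"\b({NOUN}) such as ({NOUN}(?:(?:, and |, | and ){NOUN})*)"
)
LIST_SEP = re.compile(r", and |, | and ")


def hearst_pairs(text: str) -> list[tuple[str, str]]:
    """Return (hyponym, hypernym) candidates matched by the 'such as' pattern."""
    pairs = []
    for match in SUCH_AS.finditer(text.lower()):
        hypernym, members = match.group(1), match.group(2)
        for hyponym in LIST_SEP.split(members):
            pairs.append((hyponym, hypernym))
    return pairs


sample = ("Many antibiotics such as penicillin and streptomycin inhibit bacteria. "
          "Organelles such as mitochondria, chloroplasts, and vacuoles are studied.")
print(hearst_pairs(sample))
```

Each such step is brittle on its own: plural hypernyms, multi-word terms, and ambiguous phrasing all need further dedicated steps.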

By contrast, this work proposes a fully automated approach. While automating named entity recognition and relation extraction from academic medical research articles, the author learned a number of lessons and finally found a solution by switching entirely to an advanced LLM (GPT-3). The approach described here generalizes those lessons.

A Synthetic Ontology

The proposed ontology engineering approach is fully automated. First, a series of prompts must make an LLM produce a hierarchy of terms. Next, another series of prompts must extract relations between appropriate pairs of terms in that hierarchy. The prompts must also respect the limited memory “window” (context window) of the LLM, which has to fit both the prompt and the generated text.
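The two prompt series can be sketched as follows, under explicit assumptions. `complete` is a hypothetical stand-in for whatever LLM API is used; it is not a real library call. The prompt wording is invented for illustration, and token counts are approximated by whitespace-separated words. Stage one asks for a JSON hierarchy of terms. Stage two asks for relations between pairs of those terms in batches, and every prompt is checked against the assumed context window before it is sent.

```python
import json
from itertools import combinations
from typing import Callable

CONTEXT_WINDOW = 4096        # assumed model limit, in tokens
RESERVED_FOR_OUTPUT = 1024   # room left in the window for the generated text


def approx_tokens(text: str) -> int:
    # Rough heuristic (assumption): one token per whitespace-separated word.
    return len(text.split())


def check_budget(prompt: str) -> None:
    """Reject prompts that would not leave room for the model's answer."""
    if approx_tokens(prompt) + RESERVED_FOR_OUTPUT > CONTEXT_WINDOW:
        raise ValueError("prompt and expected output exceed the context window")


def build_taxonomy(domain: str, complete: Callable[[str], str]) -> dict:
    """Stage 1: ask the model for a one-level hierarchy of terms as JSON."""
    prompt = (f"List the main sub-fields of {domain} as JSON of the form "
              f'{{"{domain}": ["term", ...]}}. Output JSON only.')
    check_budget(prompt)
    return json.loads(complete(prompt))


def extract_relations(terms: list[str], complete: Callable[[str], str],
                      batch_size: int = 20) -> list[dict]:
    """Stage 2: ask for relations between term pairs, in batches that fit."""
    pairs = list(combinations(terms, 2))
    relations = []
    for start in range(0, len(pairs), batch_size):
        batch = pairs[start:start + batch_size]
        prompt = ("For each pair below, state the relation as a JSON object "
                  '{"subject": ..., "relation": ..., "object": ...}; '
                  "skip unrelated pairs. Output a JSON list only.\n"
                  + "\n".join(f"{a} | {b}" for a, b in batch))
        check_budget(prompt)
        relations.extend(json.loads(complete(prompt)))
    return relations


def fake_llm(prompt: str) -> str:
    # Canned answers standing in for a real model (hypothetical).
    if prompt.startswith("List"):
        return '{"Biology": ["Genetics", "Ecology", "Microbiology"]}'
    return '[{"subject": "Genetics", "relation": "informs", "object": "Microbiology"}]'


taxonomy = build_taxonomy("Biology", fake_llm)
relations = extract_relations(taxonomy["Biology"], fake_llm)
```

Keeping the two stages separate is what lets each prompt stay small. The taxonomy prompt never carries relation text, and relation prompts carry only a batch of pairs.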

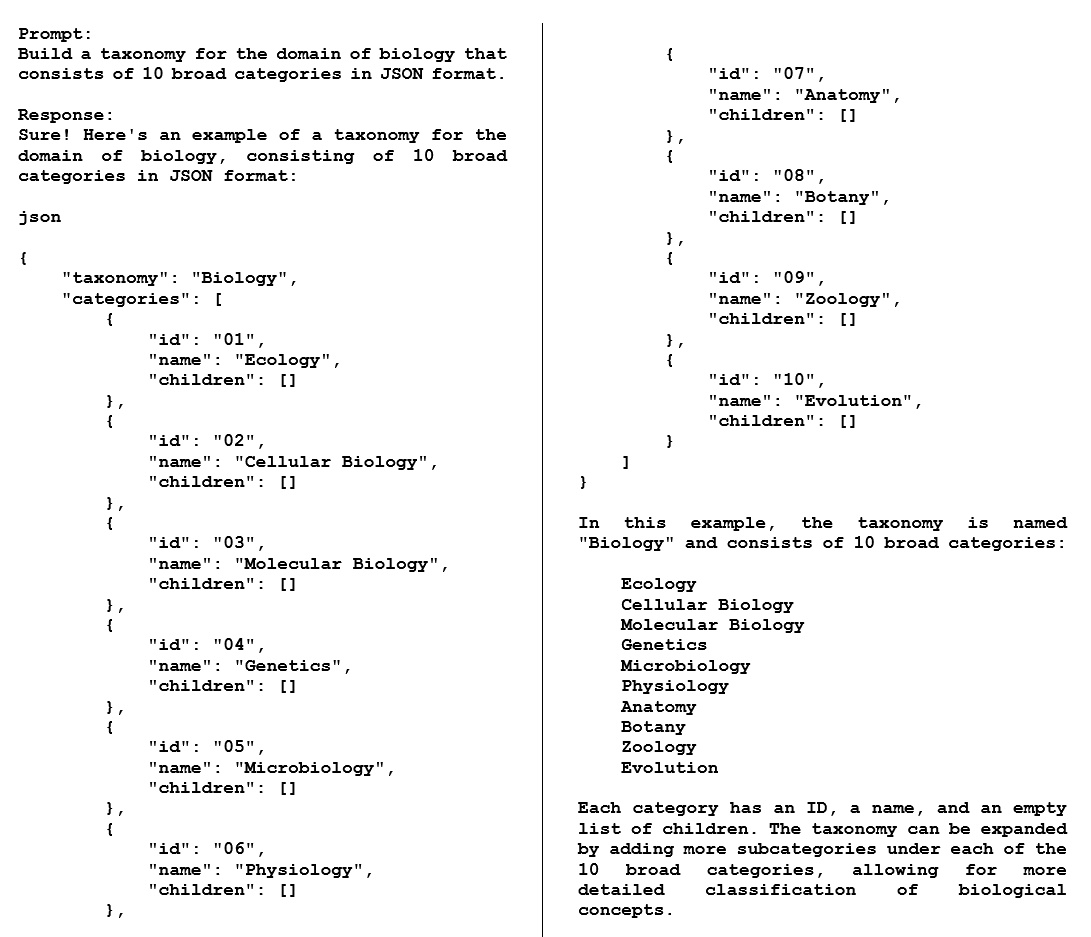

Figure 2 demonstrates a basic hierarchy with just one level. Each item in the hierarchy of terms can be expanded with an additional prompt to the LLM, which produces the next level of the hierarchy; this is repeated until the hierarchy reaches the necessary level of detail.
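The level-by-level expansion can be sketched as a breadth-first loop that sends one prompt per term and stops at a chosen depth. As before, `complete` is a hypothetical stand-in for an LLM call, the prompt text is illustrative, and each reply is assumed to be a JSON list of child terms.

```python
import json
from collections import deque
from typing import Callable


def expand_hierarchy(root: str, complete: Callable[[str], str],
                     max_depth: int = 2) -> dict[str, list[str]]:
    """Breadth-first expansion: each term gets its own prompt for sub-terms.

    Returns a mapping from each expanded term to its child terms.
    """
    children: dict[str, list[str]] = {}
    queue = deque([(root, 0)])
    while queue:
        term, depth = queue.popleft()
        if depth >= max_depth or term in children:
            continue  # deep enough, or already expanded under another parent
        prompt = f'List the main sub-topics of "{term}" as a JSON list of strings.'
        children[term] = json.loads(complete(prompt))
        queue.extend((child, depth + 1) for child in children[term])
    return children


# Hypothetical canned answers in place of a real model.
CANNED = {
    "Biology": ["Genetics", "Ecology"],
    "Genetics": ["Epigenetics", "Population genetics"],
    "Ecology": ["Marine ecology"],
}


def fake_llm(prompt: str) -> str:
    term = prompt.split('"')[1]  # recover the quoted term from the prompt
    return json.dumps(CANNED.get(term, []))


tree = expand_hierarchy("Biology", fake_llm, max_depth=2)
```

Because each call sees only one term, the prompt stays small regardless of how large the finished hierarchy grows. The `max_depth` parameter is one simple way to encode “as much detail as necessary.”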

Despite the temporary difficulty posed by the limited context window, the benefits of this bottom-up approach are significant. Synthetic ontology engineering allows for more comprehensive and accurate knowledge graphs that can be personalized to the specific needs of individual users. It can capture more complex relationships between concepts, and it can be updated and refined over time as new data becomes available, keeping it accurate and up to date. Additionally, the approach is scalable and adaptable to new contexts and data, making it well suited for knowledge domains where change is especially fast. Thus, the author proposes that the solution rests on the following key processes:

- Storage of context, such as text or graphics, along with each term in an ontology.

- Automatic generation of ontologies from the stored context and the right prompt for an LLM.

- Human verification test, which can later be automated.

- Test of internal consistency.

- Test of domain coverage.

- Version control.
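Three of the automated processes listed above can be sketched over a simple representation: the internal-consistency test, the domain-coverage test, and version control. In this representation, the taxonomy is a term-to-parent mapping and relations are (subject, relation, object) triples. The specific checks are illustrative assumptions, not a prescribed test suite: no cycles in the hierarchy, no relations pointing at undefined terms, coverage measured against a reference term list, and a content hash as the version identifier.

```python
import hashlib
import json
from typing import Optional

Taxonomy = dict[str, Optional[str]]   # term -> parent (None = root)
Triples = list[tuple[str, str, str]]  # (subject, relation, object)


def consistency_errors(parents: Taxonomy, triples: Triples) -> list[str]:
    """Internal-consistency test: no is-a cycles, no undefined terms."""
    errors = []
    for term in parents:
        seen, node = set(), term
        while node is not None:
            if node in seen:
                errors.append(f"is-a cycle through {term!r}")
                break
            if node not in parents:
                errors.append(f"{term!r} has undefined ancestor {node!r}")
                break
            seen.add(node)
            node = parents[node]
    for subj, rel, obj in triples:
        for end in (subj, obj):
            if end not in parents:
                errors.append(f"relation {rel!r} uses undefined term {end!r}")
    return errors


def coverage(parents: Taxonomy, reference_terms: set[str]) -> float:
    """Domain-coverage test: share of a reference vocabulary the ontology defines."""
    if not reference_terms:
        return 1.0
    defined = {term.lower() for term in parents}
    return sum(term.lower() in defined for term in reference_terms) / len(reference_terms)


def version_id(parents: Taxonomy, triples: Triples) -> str:
    """Version control: a content hash, identical for identical ontologies."""
    canonical = json.dumps({"parents": parents, "triples": sorted(triples)},
                           sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


parents = {"Biology": None, "Genetics": "Biology", "Ecology": "Biology"}
triples = [("Genetics", "informs", "Ecology")]
print(consistency_errors(parents, triples))                 # []
print(coverage(parents, {"genetics", "ecology", "botany"}))  # 2 of 3 terms
print(version_id(parents, triples))
```

Hashing a canonical (sorted) serialization means two runs that produce the same ontology in a different order receive the same version identifier. Any real change produces a new one.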

Preliminary Results

To test the proposed method, two general-purpose LLMs were used: GPT-NeoX-20B and GPT-3.5 (“ChatGPT”).

Model GPT-NeoX-20B: Given prompts of sufficient length, the model shows rudimentary internal consistency. It can generate general statements about ontologies and very rudimentary, high-level conceptual structures. However, it fails to generate output in JSON format when prompted to do so.
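Because a model may wrap its answer in prose or return invalid JSON, as GPT-NeoX-20B did here, a pipeline needs a check before accepting any output. The following minimal sketch assumes the answer should be a single JSON object or array. It decodes the first JSON value found in the raw text with the standard library's `json.JSONDecoder.raw_decode` and returns `None` when nothing decodes.

```python
import json

_decoder = json.JSONDecoder()


def extract_json(raw: str):
    """Return the first JSON object or array embedded in `raw`, else None.

    Text before and after the JSON (greetings, explanations) is ignored.
    """
    for start, char in enumerate(raw):
        if char in "{[":
            try:
                value, _ = _decoder.raw_decode(raw[start:])
            except json.JSONDecodeError:
                continue  # not valid JSON from this bracket; try the next one
            return value
    return None


reply = 'Sure! Here is the ontology: {"Biology": ["Genetics"]} Hope this helps.'
print(extract_json(reply))
```

A `None` result is the signal to re-prompt, or to route the answer to the human verification step in the list of key processes.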

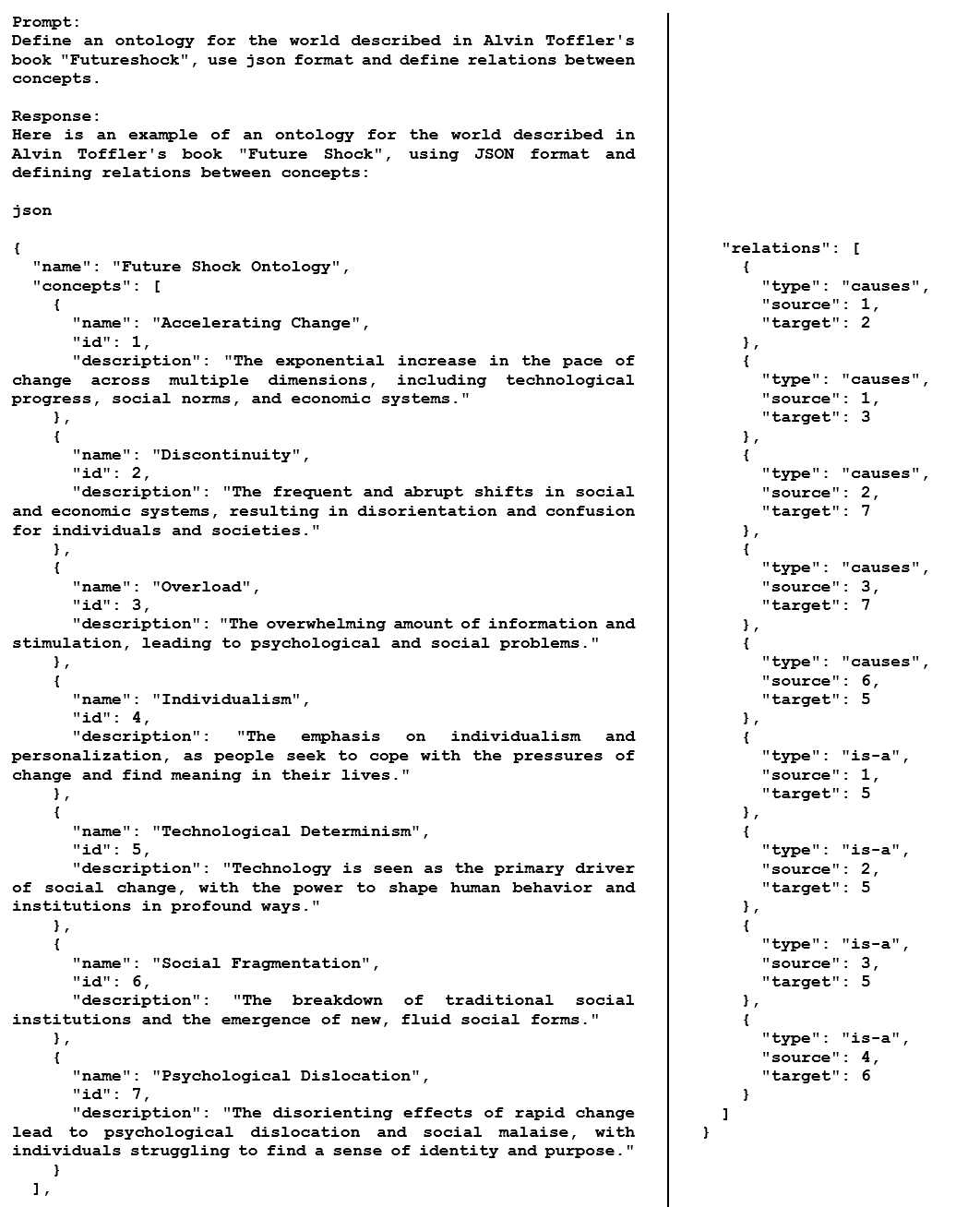

Model GPT-3.5: The model provides sufficiently well-formed answers in JSON format, as prompted, and correctly specifies ontologies; see the examples in Figures 2 and 3. It is easy to imagine how an ontology could be generated by processing a corpus of texts describing a knowledge domain. An interesting artifact is that, despite being an autoregressive LLM, this model can make “forward-looking” links to text it has not yet generated.

Figure 2: An Example of the Taxonomical Part of an Ontology for the Field of Biology in JSON Format

In this example, an LLM generated the first-level taxonomy. Given the right prompt, it can also add relations. The model is, of course, limited by the size of its “working memory”; however, this limitation can be partly circumvented by prompting the model for the taxonomy and the relations separately. Likewise, each section can be expanded with specially prepared prompts.

Figure 3 is not technically an ontology, but it shows how concepts and their relations can be listed in the same JSON output. Given a sufficient number of carefully engineered prompts, it might be possible to extract the structure of any knowledge domain and convert it into an ontology.

Figure 3: A Sample of Raw LLM-Generated Output in JSON Format
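Converting such JSON into a standard ontology format is largely mechanical. The following minimal sketch assumes the taxonomy arrives as nested JSON, where each term maps to a list of leaf terms or to further nested objects (similar in spirit to Figure 2). It uses a hypothetical `http://example.org/onto#` namespace and emits RDF Turtle in which every term becomes an `owl:Class` linked to its parent by `rdfs:subClassOf`.

```python
import json
import re
from typing import Optional, Union

PREFIXES = (
    "@prefix ex: <http://example.org/onto#> .\n"  # hypothetical namespace
    "@prefix owl: <http://www.w3.org/2002/07/owl#> .\n"
    "@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .\n"
)

Subtree = Union[dict, list, None]  # nested dict, list of leaf terms, or nothing


def local_name(term: str) -> str:
    """'DNA replication' -> 'DNAReplication' (keeps existing capitals)."""
    return "".join(w[:1].upper() + w[1:] for w in re.findall(r"[A-Za-z0-9]+", term))


def taxonomy_to_turtle(tree: dict) -> str:
    """Emit each term as an owl:Class, linked to its parent by rdfs:subClassOf."""
    lines = [PREFIXES]

    def walk(term: str, parent: Optional[str], subtree: Subtree) -> None:
        name = local_name(term)
        label = term.replace('"', '\\"')
        lines.append(f'ex:{name} a owl:Class ; rdfs:label "{label}" .')
        if parent is not None:
            lines.append(f"ex:{name} rdfs:subClassOf ex:{local_name(parent)} .")
        if isinstance(subtree, dict):
            children = list(subtree.items())
        else:  # a list of leaf terms, or None
            children = [(leaf, None) for leaf in subtree or []]
        for child, grandchildren in children:
            walk(child, term, grandchildren)

    for root, subtree in tree.items():
        walk(root, None, subtree)
    return "\n".join(lines)


raw = '{"Biology": {"Genetics": ["Epigenetics"], "Ecology": []}}'
print(taxonomy_to_turtle(json.loads(raw)))
```

Relations such as those in Figure 3 would additionally need property declarations (for example, `owl:ObjectProperty` with a domain and range). That step is omitted here.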

Future Work

There is a “Cambrian explosion” of prompts that people share online. This primordial soup of prompts appears to be a new language, a meta-language. One avenue for developing synthetic ontologies might be to crowdsource the most effective prompts and to build an open-source system for processing the output of such prompts.

Furthermore, the solution has so far been developed only at a conceptual level, and the approach needs a thorough mathematical treatment. Both its underlying logic and its mathematical representation have to be properly addressed in future work.

As LLMs gain an ever-larger operating “memory,” it will become increasingly easy to join an ontology generated by an LLM piece by piece into one non-contradictory and exhaustive whole.
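Joining pieces generated by separate prompts is where contradictions surface. The following minimal sketch assumes each fragment is a term-to-parent mapping. Fragments are merged one by one, and any term that two fragments place under different parents is reported as a conflict instead of being silently overwritten. How such conflicts should be resolved (re-prompting, human review, or allowing multiple parents) is left open here, as it is in the text.

```python
from typing import Optional

Fragment = dict[str, Optional[str]]  # term -> parent term (None = root of piece)


def merge_fragments(fragments: list[Fragment]) -> tuple[Fragment, list[str]]:
    """Merge separately generated pieces; report terms placed under different parents."""
    merged: Fragment = {}
    conflicts: list[str] = []
    for index, fragment in enumerate(fragments):
        for term, parent in fragment.items():
            if merged.get(term) is None:
                # New term, or the root of one piece that another piece places in context.
                merged[term] = parent
            elif parent is not None and parent != merged[term]:
                conflicts.append(f"fragment {index}: {term!r} under {parent!r}, "
                                 f"earlier under {merged[term]!r}")
    return merged, conflicts


part_a = {"Biology": None, "Genetics": "Biology", "Ecology": "Biology"}
part_b = {"Genetics": None, "Epigenetics": "Genetics"}  # Genetics expanded on its own
part_c = {"Ecology": "Earth science"}                   # disagrees with part_a
merged, conflicts = merge_fragments([part_a, part_b, part_c])
print(conflicts)
```

Treating a fragment's own root (`None`) as “no opinion” lets a separately expanded sub-piece attach under the parent assigned elsewhere. Only genuine disagreements are flagged.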

Currently available LLMs can already handle enough tokens to create a small ontology piece by piece. This capacity is expected to grow significantly, which would enable entire ontologies to be processed at once.

Conclusion

As data continues to be a driving force in the digital economy, it is critical that we develop effective tools for organizing and interpreting it. Synthetic ontology engineering may become a significant step forward in this regard. This approach allows for the creation of ontologies that are not bound by the constraints of predefined structures and can instead be tailored to the specific needs of a given use case. The life sciences are the best field in which to test the hypothesis proposed in this essay, because they are complex and challenging. A preliminary demonstration has been provided in this work. Although this is still a hypothesis that requires further research, testing, and validation, it is a promising solution for the future of knowledge management. If it holds, the time now spent on data conversion could instead be spent on more valuable activities, unlocking immense value for the economy.

Civilization came a long way from the first stone tools to the first microscope. In the same vein, currently available LLMs represent a relatively blunt technology. We still have to learn to use this tool before we are able to develop a meta-language for programming LLMs. Developing protocols to build synthetic ontologies might be a first step toward that goal.

Afterword

Researchers and organizations in the field of knowledge management are welcome to join our channel “Connected Science” (https://discord.gg/fMa35fS2), a strong network of like-minded individuals who can help you achieve your goals and bring your projects to fruition.

About the Author

Alexander Bikeyev is a consultant who, over the past 20 years, has professionally engaged in qualitative data analysis across multiple disciplines: economics, corporate finance, investments, management, organizational behavior, and biotech. His passion for extracting valuable insights from complex data sets has led him to develop a prototype of a solution that addresses the evolving needs of organizations.

Alex founded a platform (https://soma.science) built as a causal graph database. This proof-of-concept prototype can serve as the base layer for developing a synthetic ontology and demonstrating the potential of a more flexible and adaptable approach to managing knowledge. The current version of the platform aims to reduce the time needed to test causal hypotheses.

Acknowledgments

The author would like to thank the reviewers of this paper, especially Tyler Procko (https://www.procko.pro), for his helpful suggestions.

References

- Russell, A. (2014). Open Standards and the Digital Age: History, Ideology, and Networks (Cambridge Studies in the Emergence of Global Enterprise). Cambridge University Press.

- Barlow, J. (1998). Cybernomics: Toward A Theory of Information Economy.

- Podcasts, A. (2022). Ep 30: Unlocking the power of health data.

- Toffler, A. (1972). Future Shock. Bodley Head.

- Touvron, H., Lavril, T., Izacard, G., Martinet, X., Lachaux, M.-A., Lacroix, T., Rozière, B., Goyal, N., Hambro, E., Azhar, F., Rodriguez, A., Joulin, A., Grave, E., & Lample, G. (2023). LLaMA: Open and Efficient Foundation Language Models.